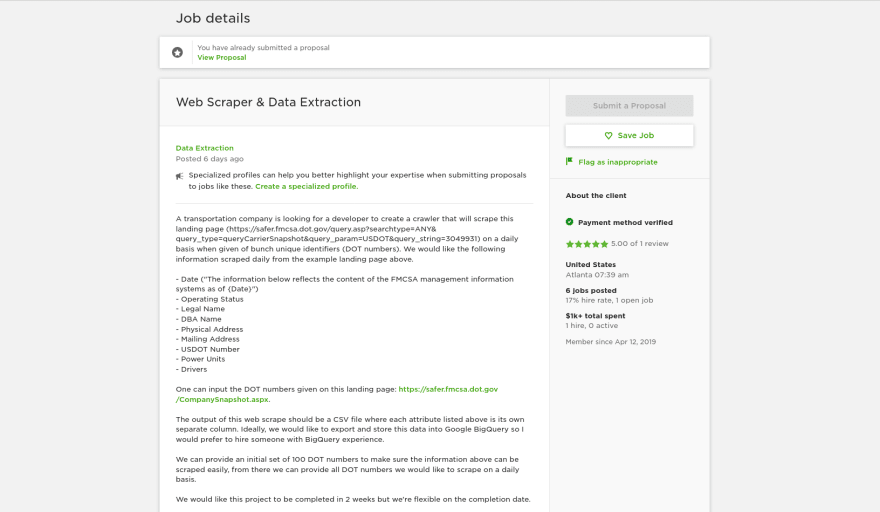

WRITE YOUR OWN WEBSCRAPER SOFTWARE

Create a bot to help you accomplish web-based tasks: extract data, monitor websites, and more without writing a single line of code. Like building blocks, a simple interface lets you make a bot in minutes rather than days or weeks, which saves a lot of development cost. Your bot runs on cloud servers even if you close your browser or shut down your computer, and it can deal with complex scenarios where other tools can't.

Bright Data provides automated web data collection solutions for businesses and describes itself as the world's most reliable proxy network. It promises to collect accurate data from any website, at any scale, and deliver it to you on autopilot in the format of your choice. It learns to bypass the latest blocking methods and frees up resources, saving time, effort, and cost.

WRITE YOUR OWN WEBSCRAPER CODE

Here you will find the ultimate list of web automation and data scraping tools for technical and non-technical people who want to collect information from a website without hiring a developer or writing code. But before we dive into the list, let's talk a bit about web scraping.

Web scraping, also called web data extraction, is an automated process of collecting publicly available information from a website. It is done with tools that simulate how a human browses the web. The data is then exported into a standardized format that is more useful to the user, such as CSV, JSON, a spreadsheet, or an API.

Web scraping can be useful in many industries, such as information technology and services, financial services, marketing and advertising, insurance, banking, consulting, and online media. It has become an important process for businesses that make data-driven decisions. Some of the most common use cases of scraped data for businesses are:

As the Internet has grown enormously and more and more businesses rely on data extraction and web automation, the need for scraping tools keeps increasing. Automatio easily handles the boring work so you don't have to.
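To make the "extract, then export" description above concrete, here is a minimal sketch that uses only Python's standard library. It swaps in `html.parser.HTMLParser` instead of a third-party parser so it runs without extra installs, and it assumes the data of interest sits in `<h2>` elements, which is a hypothetical target: a real page needs its own selectors. The names `TitleParser`, `extract_titles`, `to_csv` and `to_json` are illustrative only and do not belong to any tool mentioned here.

```python
import csv
import io
import json
from html.parser import HTMLParser


class TitleParser(HTMLParser):
    """Collect the text inside every <h2> element (hypothetical target)."""

    def __init__(self):
        super().__init__()
        self.in_h2 = False
        self.titles = []

    def handle_starttag(self, tag, attrs):
        if tag == "h2":
            self.in_h2 = True
            self.titles.append("")

    def handle_endtag(self, tag):
        if tag == "h2":
            self.in_h2 = False

    def handle_data(self, data):
        # Text nested inside the heading (e.g. in an <a>) is still captured.
        if self.in_h2:
            self.titles[-1] += data


def extract_titles(html):
    """Return the stripped text of each <h2> in the given HTML string."""
    parser = TitleParser()
    parser.feed(html)
    parser.close()
    return [title.strip() for title in parser.titles]


def to_csv(rows):
    """Export rows (dicts with 'rank' and 'title') as CSV text."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=["rank", "title"])
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


def to_json(rows):
    """Export the same rows as pretty-printed JSON text."""
    return json.dumps(rows, indent=2)


if __name__ == "__main__":
    sample = "<div><h2> First post </h2><p>body</p><h2>Second post</h2></div>"
    titles = extract_titles(sample)
    rows = [{"rank": i, "title": t} for i, t in enumerate(titles, start=1)]
    print(to_csv(rows))
    print(to_json(rows))
```

In practice the HTML string would come from an HTTP request rather than a literal; keeping the parsing and exporting in separate functions lets you test them offline on saved pages.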

```python
from bs4 import BeautifulSoup
import tweepy
import requests

URL = "..."  # the page URL is missing from the original listing; set it yourself


def scrape():
    page = requests.get(URL)
    soup = BeautifulSoup(page.content, "html.parser")
    # Narrow the search to the main article list, then collect each story card.
    home = soup.find(class_="articles-list crayons-layout_content")
    posts = home.find_all(class_="crayons-story_indention")
    # The first card is the top post; keep only its cleaned-up title text.
    top_post = posts[0].find("h2", class_="crayons-story_title").text.strip()
    tweet(top_post)


def tweet(top_post):
    # API keys provided by Twitter; replace each "#" with your own value.
    consumer_key = "#"
    consumer_secret = "#"
    access_token = "#"
    access_token_secret = "#"
    auth = tweepy.OAuthHandler(consumer_key, consumer_secret)
    auth.set_access_token(access_token, access_token_secret)
    api = tweepy.API(auth)
    # The original listing is cut off after "api = tweepy."; posting the title
    # with update_status (Twitter API v1.1) is an assumption.
    api.update_status(top_post)


if __name__ == "__main__":
    scrape()
```

There's a lot going on here, so let's go through it slowly. The tweet function takes one argument, top_post, which is the title we extracted in the scrape function. The consumer_key, consumer_secret, access_token and access_token_secret are all API keys provided to us by Twitter, and each should be a long, unreadable string.

To learn what these tokens actually do, I recommend checking out the tweepy documentation. Once you receive the email confirming your API keys, copy them into the tweet function in place of the "#" placeholders so it works as expected. Once all of this is done, we can host the bot on AWS or, my personal recommendation, Heroku. For a good guide to hosting a bot on Heroku, check out this great article.

Now just run your app and let's see what you get!
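Pasting the keys straight into `tweet` works, but it means your secrets travel with your code. A common alternative, sketched below under stated assumptions, is to read them from environment variables; this is also how Heroku exposes config vars set with `heroku config:set`. The four variable names and the `load_twitter_keys` helper are hypothetical choices for illustration, not part of tweepy or of the original bot.

```python
import os

# Hypothetical environment-variable names; use whatever names suit your host.
KEY_NAMES = (
    "TWITTER_CONSUMER_KEY",
    "TWITTER_CONSUMER_SECRET",
    "TWITTER_ACCESS_TOKEN",
    "TWITTER_ACCESS_TOKEN_SECRET",
)


def load_twitter_keys(env=None):
    """Return the four Twitter credentials as a tuple, in KEY_NAMES order.

    Raises RuntimeError naming every missing or empty variable, so a
    misconfigured deployment fails loudly instead of sending "#" to the API.
    """
    env = os.environ if env is None else env
    missing = [name for name in KEY_NAMES if not env.get(name)]
    if missing:
        raise RuntimeError("missing environment variables: " + ", ".join(missing))
    return tuple(env[name] for name in KEY_NAMES)
```

Inside `tweet`, the four `"#"` assignments would then become a single line: `consumer_key, consumer_secret, access_token, access_token_secret = load_twitter_keys()`. Passing a plain dict as `env` makes the helper easy to test without touching the real environment.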
